Chain-of-Thought (CoT)

Implement step-by-step reasoning to improve AI performance on complex problems

What is Chain-of-Thought?

Chain-of-Thought (CoT) prompting is a technique that enhances the reasoning capabilities of Large Language Models (LLMs) by having them generate intermediate reasoning steps. Instead of jumping directly to an answer, the model is guided to “think out loud” through each step of the problem-solving process, which tends to produce more accurate and explainable results.

This approach is particularly effective because LLMs often struggle with tasks requiring logical reasoning, mathematical calculations, or multi-step problem solving when they attempt to provide immediate answers.

Why Use Chain-of-Thought?

Advantages:

- Improved Accuracy: Can substantially reduce errors on complex reasoning tasks

- Low-Effort Implementation: Works with off-the-shelf LLMs without fine-tuning

- Explainable AI: Users can follow and validate the reasoning process

- Debugging Capability: Easy to identify where reasoning went wrong

- Model Robustness: Performance tends to stay more consistent across different LLM versions

- Versatile Applications: Effective for math, logic, code generation, and analysis

Trade-offs:

- Higher Token Cost: More output tokens mean increased API costs

- Slower Response Time: Additional reasoning steps take longer to generate

- Verbosity: Responses are longer and may require post-processing

Zero-Shot vs Few-Shot CoT

Zero-Shot Chain-of-Thought

The simplest form of CoT uses trigger phrases like “Let’s think step by step” to encourage reasoning:

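Prompt: When I was 3 years old, my partner was 3 times my age. Now, I am 20 years old. How old is my partner? Let's think step by step.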

Expected Output:

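1. When I was 3 years old, my partner was 3 × 3 = 9 years old
2. That makes my partner 9 - 3 = 6 years older than me
3. Now I am 20 years old, so my partner is 20 + 6 = 26 years old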
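Applied programmatically, zero-shot CoT comes down to appending the trigger phrase to the question before sending it to the model. A minimal Python sketch follows. `build_zero_shot_prompt` is a hypothetical helper, and the model call itself is omitted because it depends on your provider.

```python
# Hypothetical helper: zero-shot CoT appends a trigger phrase to the question.
DEFAULT_TRIGGER = "Let's think step by step."


def build_zero_shot_prompt(question: str, trigger: str = DEFAULT_TRIGGER) -> str:
    """Return the question followed by a CoT trigger phrase after a blank line."""
    return f"{question.strip()}\n\n{trigger}"


question = (
    "When I was 3 years old, my partner was 3 times my age. "
    "Now, I am 20 years old. How old is my partner?"
)
print(build_zero_shot_prompt(question))
```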

Few-Shot Chain-of-Thought

Providing worked examples of reasoning in the prompt improves consistency and teaches the model the desired thinking pattern.

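A minimal sketch of assembling a few-shot CoT prompt in Python. Each worked example is rendered with the same numbered-step structure, and the new question ends with the same opening so the model continues the pattern. The `Example` class, the "Q:/A:/Answer:" layout, and the pen-price example are illustrative assumptions, not a required format.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One worked example: a question, its reasoning steps, and the final answer."""
    question: str
    steps: list[str]
    answer: str


def format_example(ex: Example) -> str:
    # Number the steps so every example shares the same reasoning structure.
    lines = [f"Q: {ex.question}", "A: Let's think step by step."]
    lines += [f"{i}. {step}" for i, step in enumerate(ex.steps, start=1)]
    lines.append(f"Answer: {ex.answer}")
    return "\n".join(lines)


def build_few_shot_prompt(examples: list[Example], question: str) -> str:
    """Worked examples first, then the new question with the same opening."""
    blocks = [format_example(ex) for ex in examples]
    blocks.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(blocks)


examples = [
    Example(
        question="A shop sells pens at 2 dollars each. How much do 4 pens cost?",
        steps=["Each pen costs 2 dollars.", "4 pens cost 4 x 2 = 8 dollars."],
        answer="8 dollars",
    ),
]
print(build_few_shot_prompt(examples, "How much do 7 pens cost?"))
```

Keeping every example in the same structure matters more than the exact wording, because the model imitates the pattern it sees.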

Common Failure Patterns

Without CoT (Problematic):

Prompt: When I was 3 years old, my partner was 3 times my age. Now, I am 20 years old. How old is my partner?

With CoT (Improved):

Prompt: [Same question] Let's think step by step.
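The arithmetic behind this example shows why answering in one jump is risky. The ratio between the two ages changes over time, but the difference between them does not. In the short Python illustration below, `naive_answer` models a tempting shortcut (applying the old ratio to the current age). It is not a recorded output from any particular model.

```python
def naive_answer(current_age: int, multiplier: int) -> int:
    # Tempting shortcut: apply the old age ratio to the current age.
    return current_age * multiplier


def stepwise_answer(past_age: int, multiplier: int, current_age: int) -> int:
    partner_then = past_age * multiplier  # Step 1: 3 x 3 = 9
    age_gap = partner_then - past_age     # Step 2: 9 - 3 = 6
    return current_age + age_gap          # Step 3: 20 + 6 = 26


print(naive_answer(20, 3))        # 60: wrong, the ratio does not persist
print(stepwise_answer(3, 3, 20))  # 26: the age gap persists
```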

When to Use Chain-of-Thought

CoT is particularly effective for tasks that benefit from explicit reasoning:

Ideal Use Cases:

- Mathematical Problems: Arithmetic, algebra, geometry calculations

- Code Generation: Breaking down requirements into implementable steps

- Logical Reasoning: Puzzles, deduction, inference problems

- Synthetic Data Creation: Guided assumption-making and content generation

- Complex Analysis: Multi-factor decision making, comparative analysis

- Process Planning: Step-by-step procedure development

Decision Rule:

If you can explain the steps to solve the problem manually, CoT will likely improve AI performance.

Effective CoT Trigger Phrases

Different trigger phrases work better for different types of problems.

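One way to put this into practice is to keep trigger phrases in one place and choose one by problem type. Only "Let's think step by step." comes from this guide. The other phrases and the category names are illustrative assumptions that you should test against your own tasks.

```python
# Illustrative mapping from problem type to CoT trigger phrase.
# Only the default phrase comes from this guide; the rest are assumptions to test.
TRIGGER_PHRASES = {
    "default": "Let's think step by step.",
    "math": "Let's work through the calculation one step at a time and check each result.",
    "code": "Let's first list the requirements, then plan the implementation step by step.",
    "logic": "Let's list what we know, then reason through each deduction in order.",
}


def trigger_for(problem_type: str) -> str:
    """Return the phrase for a problem type, falling back to the default."""
    return TRIGGER_PHRASES.get(problem_type, TRIGGER_PHRASES["default"])


print(trigger_for("math"))
print(trigger_for("poetry"))  # unknown type falls back to the default phrase
```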

Practical CoT Examples

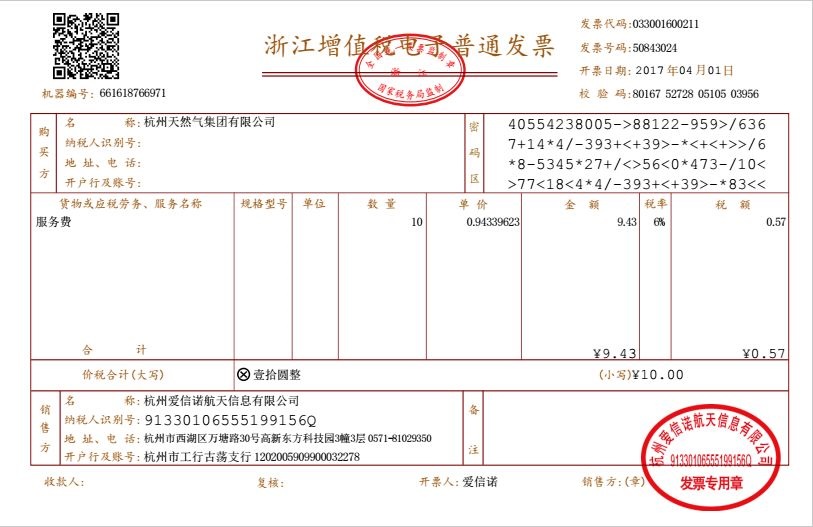

Synthetic Data Generation with CoT

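For synthetic data, CoT is most useful for making the model state its assumptions before it generates anything. The Python sketch below builds such a prompt and then parses the records. The `DATA:` marker, the JSON-array output format, and both helper names are assumptions chosen for illustration.

```python
import json


def build_synthetic_data_prompt(entity: str, count: int, fields: list[str]) -> str:
    """CoT prompt: state assumptions first, then generate, then self-check."""
    field_list = ", ".join(fields)
    return (
        f"Generate {count} realistic synthetic {entity} records with fields: {field_list}.\n"
        "Let's think step by step:\n"
        "1. State your assumptions about realistic value ranges and distributions for each field.\n"
        "2. Decide how the records should vary so the set is diverse.\n"
        "3. Generate the records.\n"
        "4. Check each record against your assumptions.\n"
        "Finally, output the records as a JSON array after a line reading 'DATA:'."
    )


def extract_records(response: str, fields: list[str]) -> list[dict]:
    """Parse the JSON after the last 'DATA:' marker; keep records with every field."""
    _, marker, payload = response.rpartition("DATA:")
    if not marker:
        raise ValueError("response has no 'DATA:' marker")
    records = json.loads(payload)  # raises json.JSONDecodeError if malformed
    return [r for r in records if all(f in r for f in fields)]
```

Filtering incomplete records afterwards is a cheap safety net, because the model's self-check step is not guaranteed to catch everything.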

Mathematical Problem Solving

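CoT answers to math problems are long, so it helps to ask for a fixed final-answer line and parse only that line. A minimal Python sketch follows. The "Final answer:" convention and the helper names are assumptions, not a standard format.

```python
import re
from typing import Optional

# Hypothetical convention: the model ends with "Final answer: <number>".
ANSWER_PATTERN = re.compile(r"Final answer:\s*(-?\d+(?:\.\d+)?)", re.IGNORECASE)


def build_math_prompt(problem: str) -> str:
    return (
        f"{problem}\n\n"
        "Let's think step by step. Show each calculation, verify the result, "
        "and end with a line of the form 'Final answer: <number>'."
    )


def extract_final_answer(response: str) -> Optional[float]:
    """Return the number from the last 'Final answer:' line, or None if absent."""
    matches = ANSWER_PATTERN.findall(response)
    return float(matches[-1]) if matches else None
```

A `None` result is a useful signal in its own right: the model did not follow the format, and you can retry or flag the response.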

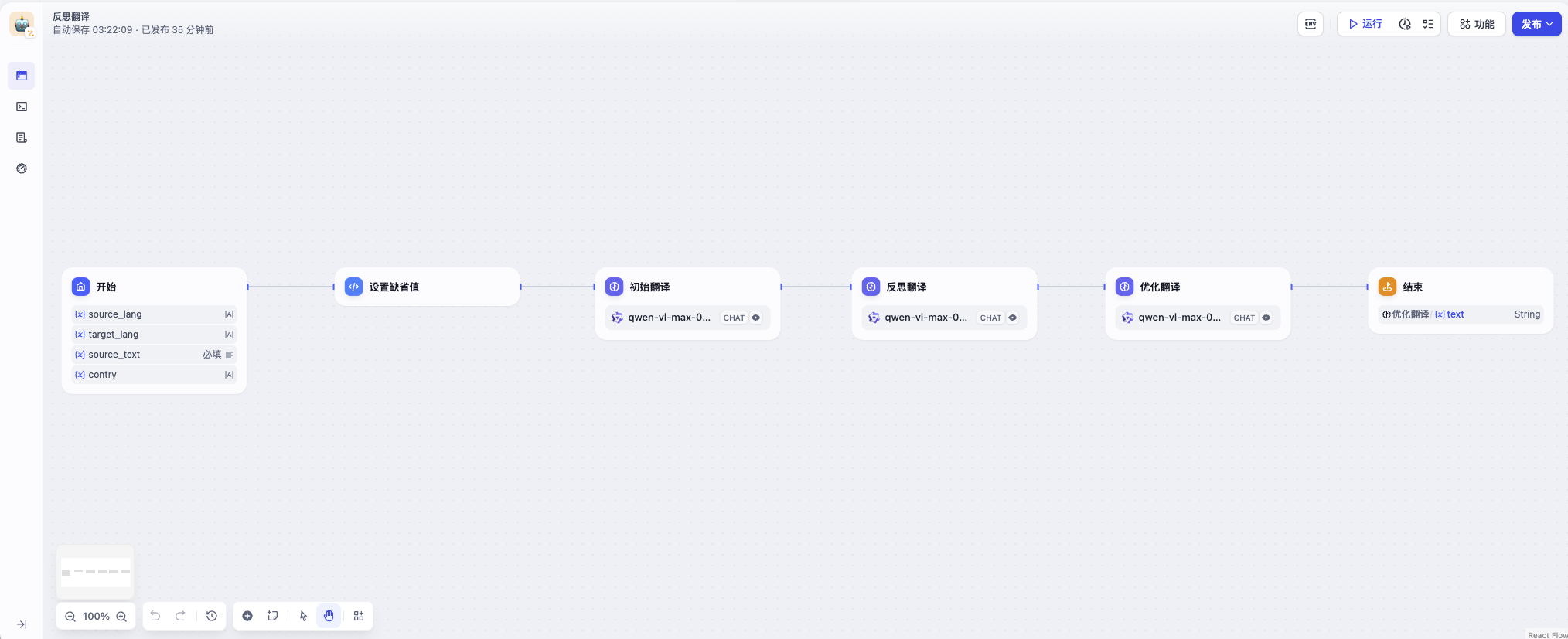

Advanced CoT with Latitude Chains

LLMs perform better when they can reason through complex problems step by step. In Latitude, each <step> block calls the AI with only the content inside that block, so the model can focus on that specific part of the reasoning process. This makes complex reasoning more structured and manageable.

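Latitude's <step> syntax is not reproduced here. As a rough, provider-agnostic illustration of the same idea, the Python sketch below runs each step as a separate model call that sees only that step's instructions plus the previous step's result. `llm` is a hypothetical callable standing in for your model client. Passing forward only the previous output is a design assumption and does not describe how Latitude manages context.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: takes a prompt, returns the model's text


def run_chain(llm: LLM, steps: list[str], task: str) -> list[str]:
    """Run each step as its own call; each call sees only its step and the prior result."""
    outputs: list[str] = []
    previous = task
    for instruction in steps:
        prompt = f"{instruction}\n\nInput:\n{previous}"
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


steps = [
    "Step 1: Restate the problem and list the known facts.",
    "Step 2: Using the facts above, work through the calculation step by step.",
    "Step 3: Check the calculation and state the final answer.",
]


# Stand-in model for demonstration: echoes the first line of each prompt.
def fake_llm(prompt: str) -> str:
    return prompt.splitlines()[0]


print(run_chain(fake_llm, steps, "How old is my partner?"))
```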

CoT for Different Domains

Scientific Analysis

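For scientific analysis, the reasoning steps can mirror hypothesis formation and testing. The list of steps below is an illustrative assumption, and `build_scientific_prompt` is a hypothetical helper.

```python
# Illustrative step order emphasizing hypothesis formation and testing.
SCIENTIFIC_STEPS = [
    "Summarize the observations or data provided.",
    "Propose one or more hypotheses that could explain them.",
    "For each hypothesis, identify the evidence that supports or contradicts it.",
    "Consider alternative explanations and confounding factors.",
    "State the best-supported conclusion and how confident you are in it.",
]


def build_scientific_prompt(observations: str) -> str:
    """Number the analysis steps so the model reasons through them in order."""
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(SCIENTIFIC_STEPS, start=1))
    return f"Observations:\n{observations}\n\nLet's think step by step:\n{numbered}"
```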

Legal Reasoning

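For legal reasoning, this sketch substitutes IRAC (Issue, Rule, Application, Conclusion), a common legal-analysis structure, as the step order. The original example may have used different steps. The helper name and the prompt wording are assumptions.

```python
# IRAC (Issue, Rule, Application, Conclusion), used here as an illustrative step order.
IRAC_STEPS = {
    "Issue": "Identify the precise legal question raised by the facts.",
    "Rule": "State the rules, statutes, or precedents that govern that question.",
    "Application": "Apply each rule to the specific facts, one element at a time.",
    "Conclusion": "State the most likely outcome and any significant uncertainties.",
}


def build_legal_prompt(facts: str, jurisdiction: str) -> str:
    """Walk the model through IRAC in order (dicts preserve insertion order)."""
    steps = "\n".join(
        f"{i}. {name}: {task}"
        for i, (name, task) in enumerate(IRAC_STEPS.items(), start=1)
    )
    return (
        f"Jurisdiction: {jurisdiction}\nFacts:\n{facts}\n\n"
        f"Let's reason through this step by step:\n{steps}"
    )
```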

CoT with Self-Correction

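Self-correction can be sketched as a draft, review, revise loop. In the Python sketch below, `llm` is a hypothetical callable for your model client, and the "reply with exactly 'OK'" convention is an assumption used to detect approval.

```python
from typing import Callable

LLM = Callable[[str], str]  # hypothetical: prompt in, model text out


def solve_with_self_correction(llm: LLM, problem: str, max_rounds: int = 2) -> str:
    """Draft a step-by-step answer, ask the model to review it, revise until approved."""
    answer = llm(f"{problem}\n\nLet's think step by step.")
    for _ in range(max_rounds):
        review = llm(
            "Review the reasoning below step by step. If every step is correct, "
            "reply with exactly 'OK'. Otherwise describe the first error.\n\n" + answer
        )
        if review.strip() == "OK":
            break
        answer = llm(
            f"{problem}\n\nA previous attempt contained this error:\n{review}\n\n"
            "Let's think step by step again, fixing that error."
        )
    return answer
```

If all `max_rounds` are used up, the last revision is returned without another review. Cap the rounds to keep token costs bounded.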

CoT with Multiple Perspectives

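A minimal sketch of multi-perspective CoT: the model reasons separately from each viewpoint, then a final call compares the analyses and synthesizes them. `llm` is a hypothetical model callable, and the default perspectives are illustrative.

```python
from typing import Callable, Sequence

LLM = Callable[[str], str]  # hypothetical: prompt in, model text out

# Illustrative perspectives; pick the ones relevant to your decision.
DEFAULT_PERSPECTIVES = ("financial analyst", "end user", "security engineer")


def analyze_from_perspectives(
    llm: LLM, question: str, perspectives: Sequence[str] = DEFAULT_PERSPECTIVES
) -> str:
    """Reason step by step from each perspective separately, then synthesize."""
    views = []
    for role in perspectives:
        view = llm(f"As a {role}, think step by step about this question:\n{question}")
        views.append(f"{role}:\n{view}")
    synthesis_prompt = (
        f"Question:\n{question}\n\nAnalyses from different perspectives:\n\n"
        + "\n\n".join(views)
        + "\n\nCompare these analyses step by step, note where they agree or conflict, "
        "and give a balanced recommendation."
    )
    return llm(synthesis_prompt)
```

Separate calls keep each perspective from being anchored by the others. The cost is one extra request per perspective.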

Integration with Latitude Features

CoT with Dynamic Variables

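The idea behind dynamic variables is that one CoT prompt is reused with different inputs filled in at run time. Latitude has its own variable syntax, which is not shown here. The sketch below uses Python's standard `string.Template` instead, and the template text and variable names are hypothetical.

```python
from string import Template

# Hypothetical CoT template; $-placeholders are filled at run time.
COT_TEMPLATE = Template(
    "You are helping with a $domain problem.\n"
    "Problem: $problem\n\n"
    "Let's think step by step. Use at most $max_steps numbered steps, "
    "then give the final answer."
)


def render_cot_prompt(domain: str, problem: str, max_steps: int = 5) -> str:
    """Fill the variables; substitute() raises KeyError if a placeholder is unfilled."""
    return COT_TEMPLATE.substitute(domain=domain, problem=problem, max_steps=max_steps)


print(render_cot_prompt("finance", "Is the project profitable after 3 years?", max_steps=4))
```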

CoT with Tool Integration

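A common way to combine CoT with tools is to let the model reason in text and hand exact arithmetic off to code. The sketch below assumes an invented convention in which the model writes `CALC: <expression>` lines. It is not Latitude's tool-calling API, which is not shown here. The evaluator parses expressions with Python's `ast` module rather than `eval`, so only numbers and + - * / are accepted.

```python
import ast
import operator

# Hypothetical convention: the model writes "CALC: <expression>" when it needs arithmetic.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def safe_eval(expr: str) -> float:
    """Evaluate +, -, *, / and parentheses on numbers only (no names or calls)."""
    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"Unsupported expression: {expr!r}")
    return walk(ast.parse(expr, mode="eval"))


def answer_tool_calls(model_output: str) -> list[str]:
    """Find CALC lines in the model's reasoning and return results to send back."""
    results = []
    for line in model_output.splitlines():
        if line.startswith("CALC:"):
            expr = line[len("CALC:"):].strip()
            results.append(f"{expr} = {safe_eval(expr)}")
    return results
```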

Best Practices

Choosing the Right CoT Approach

- Zero-Shot CoT: Use simple trigger phrases like “Let’s think step by step” for straightforward problems

- Few-Shot CoT: Provide examples when you need consistent reasoning patterns or specific approaches

- Multi-Step Chains: Use Latitude <step> blocks for complex problems requiring focused attention on each phase

- Cost Consideration: Balance reasoning quality with token costs; more steps can improve results but also increase costs

Effective Prompt Design

- Clear Step Labels: Use numbered steps or clear headers to guide reasoning

- Logical Flow: Ensure each step builds logically on the previous one

- Explicit Instructions: Always include trigger phrases to activate reasoning mode

- Verification Steps: Include self-checking and validation mechanisms

- Domain-Specific Language: Use terminology and approaches familiar to the problem domain

Optimizing Performance

- Model Selection: Use GPT-4 or Claude for complex reasoning tasks

- Temperature Settings: Lower temperature (0.1-0.3) for logical/mathematical problems

- Token Management: Balance reasoning detail with cost efficiency

- Error Handling: Include correction and retry mechanisms

- Robustness: CoT can help maintain performance across different LLM versions

Domain-Specific Adaptations

- Mathematical Problems: Focus on step-by-step calculations and verification

- Code Generation: Break down requirements before implementation

- Scientific Analysis: Emphasize hypothesis formation and testing

- Business Decisions: Include stakeholder analysis and risk assessment

- Creative Tasks: Allow for iterative refinement and exploration

Cost-Benefit Analysis

- When CoT is Worth It: Complex reasoning, high-stakes decisions, mathematical problems

- When to Avoid: Simple factual queries, high-volume/low-cost applications

- Optimization: Use shorter reasoning chains for simpler problems

- Monitoring: Track accuracy improvements vs. cost increases

Common Pitfalls

Critical Mistakes to Avoid:

Reasoning Errors:

- Skipping Logical Steps: Don’t let the AI jump to conclusions without showing work

- Unclear Transitions: Make connections between steps explicit and logical

- Missing Verification: Always include checking mechanisms and validation steps

- Assuming Expertise: Remember that LLMs can make confident but incorrect mathematical errors

Implementation Issues:

- Over-complexity: Keep steps manageable - too many steps can confuse the model

- Inconsistent Patterns: When using few-shot, ensure examples follow the same reasoning structure

- Wrong Trigger Phrases: Different phrases suit different problem types; match the phrase to the task

- Ignoring Context: Make sure reasoning steps are appropriate for the problem domain

Cost Management:

- Unnecessary Verbosity: Don’t use CoT for simple factual queries that don’t need reasoning

- Excessive Steps: More steps aren’t always better - find the right balance

- Poor Token Planning: Account for the 2-3x token increase when budgeting
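To budget for CoT, a rough estimate can scale output tokens by the 2-3x rule of thumb from this guide. In the Python sketch below, the prices, volumes, and function name are hypothetical. The assumption that the multiplier applies only to output tokens is also a simplification: if you add few-shot examples, raise `input_tokens` as well.

```python
def estimate_monthly_cost(
    requests_per_month: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
    output_multiplier: float = 1.0,
) -> float:
    """Estimated monthly API spend, in the same currency as the prices.

    output_multiplier scales output tokens to model extra reasoning text
    (e.g. 2.0-3.0 for CoT, per the rule of thumb in this guide).
    """
    input_cost = input_tokens * input_price_per_million / 1_000_000
    output_cost = output_tokens * output_multiplier * output_price_per_million / 1_000_000
    return requests_per_month * (input_cost + output_cost)


# Hypothetical prices and volumes, for illustration only.
baseline = estimate_monthly_cost(10_000, 500, 200, 1.00, 2.00)
with_cot = estimate_monthly_cost(10_000, 500, 200, 1.00, 2.00, output_multiplier=3.0)
print(f"baseline: {baseline:.2f}, with CoT: {with_cot:.2f}")
```

With these made-up numbers, tripling output tokens raises the estimate from 9.00 to 17.00 per month. Input tokens are unchanged, so total cost grows by less than 3x.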

When NOT to Use CoT

CoT isn’t always the best approach. Avoid it for:

- Simple Factual Queries: “What is the capital of France?” doesn’t need reasoning steps

- High-Volume Applications: When processing thousands of requests where cost matters more than reasoning

- Well-Defined Formats: When you need consistent, structured outputs without explanation

- Time-Sensitive Tasks: When response speed is more important than reasoning quality

- Retrieval Tasks: When the answer exists in a knowledge base and doesn’t require reasoning

Implementation Checklist

When implementing CoT in your prompts, use this checklist:

✅ Pre-Implementation

- Confirm the task benefits from step-by-step reasoning

- Choose appropriate CoT type (zero-shot vs few-shot vs multi-step)

- Select effective trigger phrases for your domain

- Plan for increased token costs (typically 2-3x)

✅ Prompt Design

- Include clear step labels and logical flow

- Add verification/checking steps

- Provide examples if using few-shot approach

- Test with edge cases and failure scenarios

✅ Optimization

- Adjust temperature based on task type (lower for logic/math)

- Monitor accuracy improvements vs cost increases

- Iterate on step structure based on results

- Consider using Latitude <step> blocks for complex reasoning

Key Takeaways

Chain-of-Thought prompting transforms how LLMs approach complex problems by making their reasoning explicit and systematic. Here are the essential points:

Core Benefits:

- Substantial accuracy improvements on reasoning tasks without model fine-tuning

- Explainable results that allow debugging and validation

- More consistent performance across different LLM versions

Best Applications:

- Mathematical and logical problems

- Code generation with requirement breakdown

- Complex analysis requiring multiple perspectives

- Any task where you can explain the solution steps manually

Cost Considerations:

- 2-3x more tokens means higher costs and slower responses

- Use strategically for high-value, complex reasoning tasks

- Consider simpler approaches for basic queries

Implementation Success Factors:

- Choose the right CoT variant (zero-shot, few-shot, or multi-step)

- Use domain-appropriate trigger phrases and terminology

- Include verification steps to catch reasoning errors

- Balance reasoning depth with practical constraints

Chain-of-Thought is a low-effort, high-impact technique that can significantly improve AI performance on complex tasks. The key is knowing when and how to apply it effectively.

Advanced CoT Patterns

CoT with Error Correction

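Error correction can also be done partly in code: scan the model's reasoning for simple arithmetic claims, recompute them, and send any mismatches back for a corrected attempt. The Python sketch below assumes each step contains single-operation claims such as "9 - 3 = 6". The regex does not handle longer expressions, and the helper names are hypothetical.

```python
import operator
import re

# Matches single-operation claims such as "3 × 3 = 9" or "20 + 6 = 26".
STEP_PATTERN = re.compile(
    r"(-?\d+(?:\.\d+)?)\s*([-+*/×x])\s*(-?\d+(?:\.\d+)?)\s*=\s*(-?\d+(?:\.\d+)?)"
)
OPS = {
    "+": operator.add, "-": operator.sub,
    "*": operator.mul, "×": operator.mul, "x": operator.mul,
    "/": operator.truediv,
}


def find_arithmetic_errors(reasoning: str, tol: float = 1e-9) -> list[str]:
    """Return each 'a op b = c' claim in the reasoning whose result is wrong."""
    errors = []
    for a, op, b, c in STEP_PATTERN.findall(reasoning):
        if op == "/" and float(b) == 0:
            errors.append(f"{a} {op} {b} = {c} (division by zero)")
            continue
        actual = OPS[op](float(a), float(b))
        if abs(actual - float(c)) > tol:
            errors.append(f"{a} {op} {b} = {c} (should be {actual:g})")
    return errors


def correction_prompt(problem: str, reasoning: str, errors: list[str]) -> str:
    """Ask for a new step-by-step attempt that fixes the listed errors."""
    listed = "\n".join(f"- {e}" for e in errors)
    return (
        f"{problem}\n\nPrevious reasoning:\n{reasoning}\n\n"
        f"It contains these calculation errors:\n{listed}\n\n"
        "Let's think step by step again and correct them."
    )
```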

CoT with Confidence Scoring

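A minimal sketch of confidence scoring: ask the model to end with a confidence line, parse it, and route low-confidence or unscored answers to a retry or human review. The "Confidence: N%" format and the 0.7 threshold are assumptions. Self-reported confidence is a rough signal, not a calibrated probability.

```python
import re
from typing import Optional

CONFIDENCE_PATTERN = re.compile(r"Confidence:\s*(\d+(?:\.\d+)?)\s*(%?)", re.IGNORECASE)


def build_confidence_prompt(question: str) -> str:
    return (
        f"{question}\n\nLet's think step by step. After your final answer, "
        "add a line 'Confidence: <0-100>%' rating how sure you are."
    )


def parse_confidence(response: str) -> Optional[float]:
    """Return confidence in [0, 1] from the last 'Confidence:' line, or None if absent."""
    matches = CONFIDENCE_PATTERN.findall(response)
    if not matches:
        return None
    value, percent = matches[-1]
    # Treat "85%" or bare values above 1 as percentages; otherwise as a fraction.
    score = float(value) / 100 if percent or float(value) > 1 else float(value)
    return max(0.0, min(1.0, score))


def needs_review(response: str, threshold: float = 0.7) -> bool:
    """Flag answers with low or missing confidence for a retry or human review."""
    score = parse_confidence(response)
    return score is None or score < threshold
```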

Next Techniques

Explore these related prompting techniques:

- Tree of Thoughts - Explore multiple reasoning paths

- Self-Consistency - Multiple CoT attempts with voting

- Few-shot Learning - CoT with examples

- Constitutional AI - Self-correcting reasoning
